Quality intervention

The hidden cost of shipping fast: how to push the boundaries without losing customer and executive trust.

The Wake-Up Call

“Our revenue went from thousands to $165, and your software is costing us money.” When a small business owner told me this during COVID, the conversation was no longer about our pricing and packaging; it was about our product's reliability. Here's how we transformed our approach to quality while maintaining growth.

It was a tough year for everybody. I walked down Fillmore Street in San Francisco, and instead of the lively buzz of my neighborhood restaurants, I was met with shuttered businesses and dark, empty streets. I was the GM of the Local Business line at Nextdoor, and overnight more than 50% of businesses closed, with many more closing shortly after.

We threw our business strategy out the door. Without my saying much, the teams galvanized around a single strategy: getting as many dollars into businesses' pockets as we could. We quickly focused on adding features for touchless delivery, fundraising, and gift cards, and paused subscription renewal work. In our rush to “meet the moment,” we earned press coverage, got to market before our largest competitors, and found partnership conversations easy because prospects saw the value we were providing. Our speed had been earned through quarters of investment in QA infrastructure, business cataloging, and GraphQL to modularize our customer profiles, but our products and our teams started to show cracks.

Business Bundle for Local Businesses

Making the Case for Quality

Urged on by our busy customers, who had been burned by the empty promises of marketing software, we knew we owed it to them to deliver a reliable system. We decided to take a deliberate pause in the upcoming quarter to focus on quality. But how do you even do that when a GM's mantra is P&L, and that L was predictably getting bigger? You have the conversation in the open and show leadership.

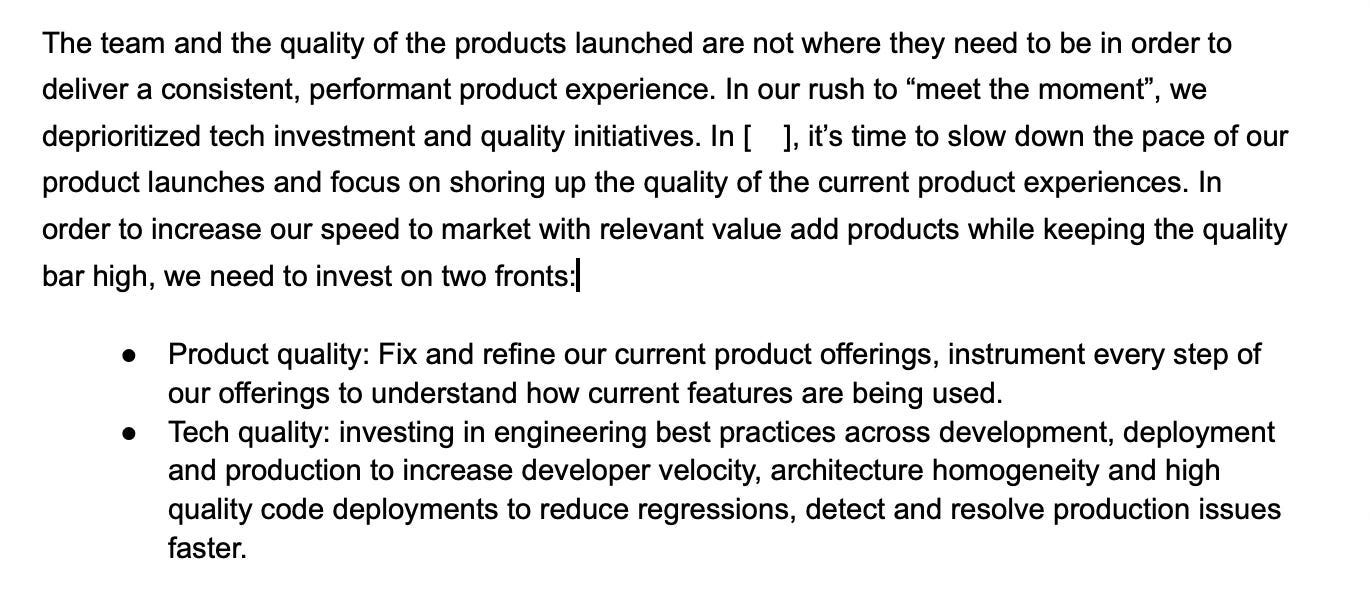

Here is an excerpt of the quarter's overview. Beneath all the press hits, the customer love, and the heroics was the discussion point for that day's leadership conversation.

Feel free to borrow the template above; several PMs I coached have used this framing when they had to deliver bad news. It turns out that sharing bad news together with a plan for fixing things, rather than sugarcoating everything, gives you much more credibility as a leader. Plus, you won't make much revenue from pissed-off users who don't trust you anymore.

Here is a framing for making the case to leadership in measurable terms:

Cost of quality issues

[x]% increase in support tickets

[y] enterprise customers paused expansion

[z] negative press mentions

Team spending [a]% time on fixes vs. features

Customer sentiment quotes

Team sentiment quotes
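Two of the bracketed numbers, [x] and [a], are simple arithmetic once you have the raw counts. Here is a minimal Python sketch, assuming you can export ticket counts and logged hours per quarter from your own tools; the `QuarterSnapshot` fields and the sample values are made up for illustration and are not Nextdoor data.

```python
from dataclasses import dataclass


@dataclass
class QuarterSnapshot:
    # Hypothetical fields; populate them from your support desk and sprint tracker.
    support_tickets: int
    fix_hours: float
    feature_hours: float


def pct_increase(before: float, after: float) -> float:
    """Percent change from the baseline period to the current one ([x] in the template)."""
    if before == 0:
        raise ValueError("baseline must be non-zero")
    return (after - before) * 100 / before


def fix_share(snapshot: QuarterSnapshot) -> float:
    """Percent of logged time spent on fixes rather than features ([a] in the template)."""
    total = snapshot.fix_hours + snapshot.feature_hours
    return snapshot.fix_hours * 100 / total if total else 0.0


# Illustrative numbers only.
previous = QuarterSnapshot(support_tickets=400, fix_hours=300, feature_hours=1700)
current = QuarterSnapshot(support_tickets=620, fix_hours=700, feature_hours=1300)

x = pct_increase(previous.support_tickets, current.support_tickets)
a = fix_share(current)
print(f"{x:.0f}% increase in support tickets")
print(f"Team spending {a:.0f}% time on fixes vs. features")
```

The remaining placeholders ([y] and [z]) are counts you can pull directly, and the sentiment quotes are qualitative, so they stay out of the code.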

Now on to the hard part: the plan and the execution.

Building Quality Systems

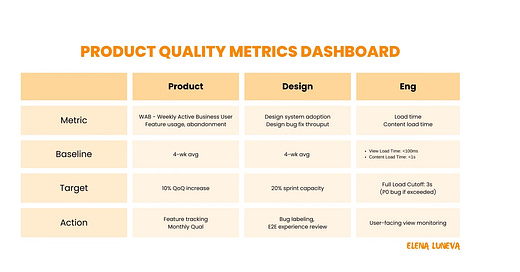

At this point, most of our feedback about quality was anecdotal, and we did not have the tracking in place to really measure the return on our quarter-long investment. We needed a baseline understanding of product, design, and engineering quality.

Quality Metrics

Product Quality

We focused on increasing weekly active businesses: the percentage of our businesses that return to and engage with our products. We then measured those experiences through the individual features that made them up. That direct usage, combined with qualitative feedback, showed us overall business value by cohort and whether new functionality was actually increasing that value for users. Behind those metrics, we dug into the design and engineering aspects of the product to find where the detractors lay.
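Weekly active businesses can be computed per cohort from an activity log. Here is a minimal Python sketch, assuming a hypothetical list of `(business_id, activity_date)` events and a roster mapping every business on the platform to a cohort; the cohort names, IDs, and dates are invented for illustration.

```python
from collections import Counter
from datetime import date, timedelta


def weekly_active_rate(events, roster, week_start):
    """Percent of businesses in each cohort with at least one activity event
    in the 7 days starting at week_start.

    events: iterable of (business_id, activity_date) tuples
    roster: dict mapping every business_id on the platform to its cohort
    """
    week_end = week_start + timedelta(days=7)  # exclusive upper bound
    # A set, so repeat visits by the same business count once.
    active = {biz for biz, day in events if week_start <= day < week_end}
    totals = Counter(roster.values())
    active_counts = Counter(roster[biz] for biz in active if biz in roster)
    return {cohort: active_counts[cohort] * 100 / total for cohort, total in totals.items()}


# Hypothetical sample data.
roster = {"b1": "restaurants", "b2": "restaurants", "b3": "restaurants",
          "b4": "salons", "b5": "salons"}
events = [
    ("b1", date(2020, 6, 1)),
    ("b1", date(2020, 6, 4)),   # repeat visit, still one active business
    ("b2", date(2020, 6, 3)),
    ("b4", date(2020, 6, 2)),
    ("b5", date(2020, 5, 20)),  # outside the week being measured
]
rates = weekly_active_rate(events, roster, week_start=date(2020, 6, 1))
print(rates)
```

Tagging each event with the feature used and grouping on that tag as well would give one way to get the feature-level breakdown described above.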

Design Quality

Design debt

In our frequent rush to ship MVPs, many design and cosmetic bugs were filed and labeled; other times people would just notice them and give up, because no one believed there was anyone to fix them. Each sprint these bugs were deprioritized in favor of new features. No one ever works on P4s. Over time their accumulation eroded the product experience, which started to look unfinished. We started by establishing a design debt bug throughput baseline and committed at least 10% of the team's time to it.

Admittedly this is a rough measure, since the number of tickets obviously doesn't correspond directly to overall quality. But if the team commits to actually filing tickets and fixing them, the mindset shifts from “no one cares” to “we see these, and we do care,” so throughput becomes a proxy for quality. Tech debt is frequently understood, but design debt is not treated with the same fervor, and this focus helped us give the UX the attention it needed.
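Both halves of that commitment, the 10% time floor and the throughput baseline, can be checked sprint by sprint. Here is a minimal Python sketch, assuming you log team hours and closed design debt tickets per sprint; the function name and the sample sprint are hypothetical.

```python
DESIGN_DEBT_FLOOR = 0.10  # the "at least 10% of the time" commitment


def design_debt_check(capacity_hours, design_debt_hours, bugs_closed, baseline_closed):
    """Compare one sprint against the time floor and the throughput baseline."""
    if capacity_hours <= 0:
        raise ValueError("capacity must be positive")
    share = design_debt_hours / capacity_hours
    return {
        "share": share,
        "meets_floor": share >= DESIGN_DEBT_FLOOR,
        # Positive means more design debt bugs closed than the baseline sprint.
        "throughput_delta": bugs_closed - baseline_closed,
    }


# Illustrative sprint: 400 team-hours, 44 on design debt, 12 bugs closed vs. a baseline of 8.
report = design_debt_check(400, 44, bugs_closed=12, baseline_closed=8)
print(report)
```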

UI performance

User-facing views have performance requirements for both visual rendering and load time. Most users have the attention span of a goldfish and will bounce, never to return, if your pages take more than a few seconds to load. We had to establish baseline goals, so we put together this mandate:

“Every view must be visible in under 100ms, with full content loading in under 1s. We should track progress towards this goal on every view. Any view that can't fully load in 3s should be considered a P0 bug.”
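One way to make the mandate enforceable is to check every view's timings against it automatically. Here is a minimal Python sketch, assuming per-view timings (for example, a high percentile from real-user monitoring) are already collected elsewhere; the view names and numbers are hypothetical.

```python
from dataclasses import dataclass

# Thresholds taken from the mandate.
VISIBLE_BUDGET_MS = 100
FULL_LOAD_BUDGET_MS = 1_000
P0_THRESHOLD_MS = 3_000


@dataclass
class ViewTiming:
    name: str
    first_visible_ms: float  # time until the view is first visible
    full_load_ms: float      # time until all content has loaded


def classify(view: ViewTiming) -> str:
    """Label a view against the performance mandate."""
    if view.full_load_ms > P0_THRESHOLD_MS:
        return "P0"  # can't fully load in 3s
    if view.first_visible_ms < VISIBLE_BUDGET_MS and view.full_load_ms < FULL_LOAD_BUDGET_MS:
        return "meets goal"
    return "needs work"  # tracked toward the goal, but not yet a P0


# Hypothetical view names and timings.
views = [
    ViewTiming("business_profile", 80, 900),
    ViewTiming("messages", 250, 1_800),
    ViewTiming("insights", 400, 3_500),
]
for view in views:
    print(view.name, classify(view))
```

Running a check like this in CI or on a dashboard turns “track progress on every view” into a list you can sort, and surfaces P0 views without anyone having to notice them by hand.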

Design system usage & native implementations

At Nextdoor we had a native design system called Blocks, and a team's usage of its components was a strong indicator of UI and brand consistency. Ours was, let's just say, not where it should have been. Moving the team onto a consistent design system was good not only for brand and UX consistency but also for implementation time.

Another tradeoff: it was faster to build new experiences in webviews (non-native user experiences), but over time they accrued load-time, consistency, and other debt. It was time for us to invest in iOS and Android development and move the local business experience into native paradigms. True, this would take longer than the quarter we had set aside for quality, but we would make a dent in the quarter ahead.

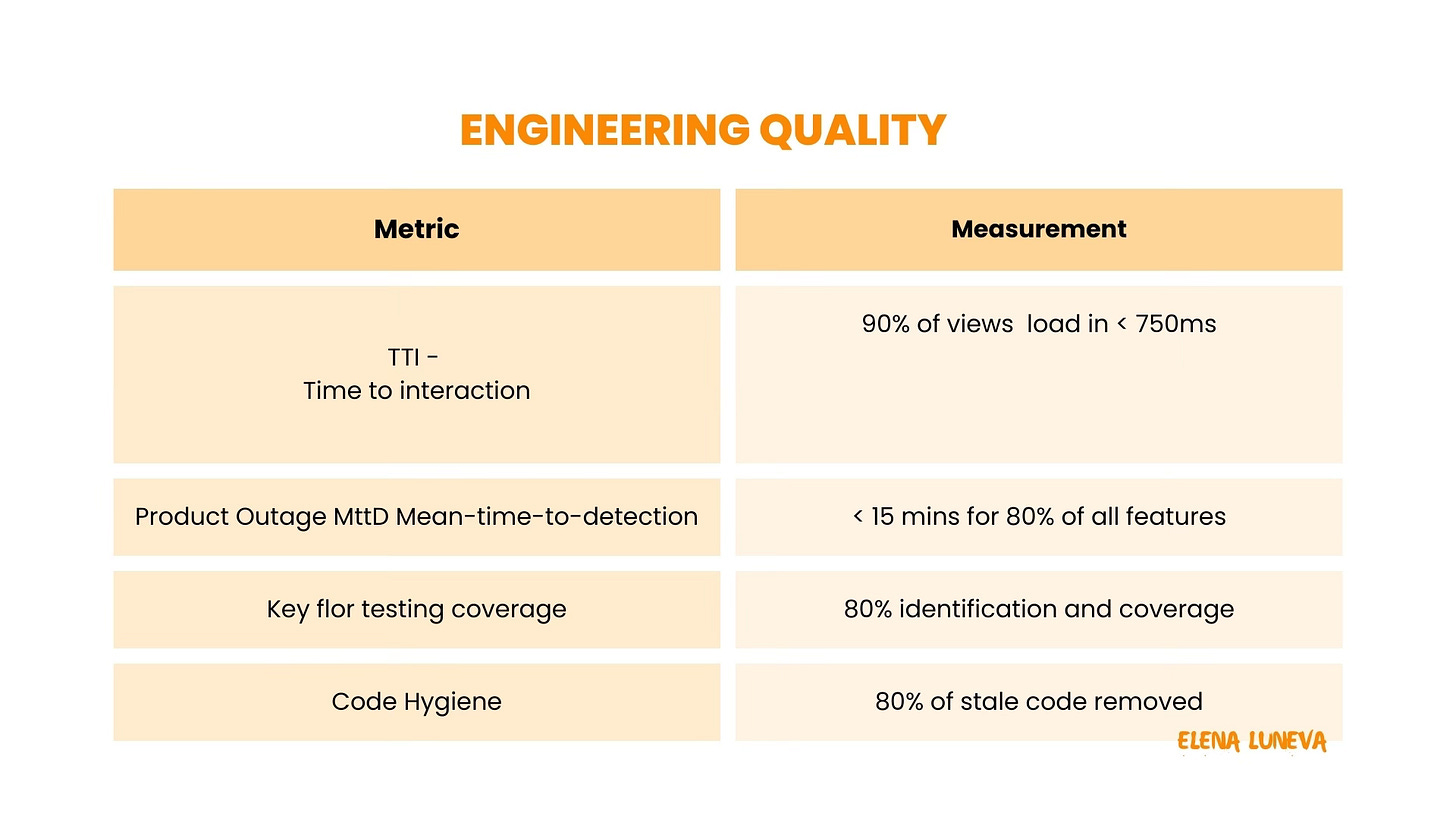

Engineering Quality

Just as with design, a good starting point is a spike into what the team actually knows and what is already instrumented, to form the initial baselines and chart the path to higher product quality. As discussed under design quality, uptime and load time are critical to the overall user experience, so they are a cross-team problem rather than an individual one: the way something is built frequently goes hand in hand with product, business, and design direction. We started with TTI (time to interactive), MTTD (mean time to detection) for product outages, identification and coverage of critical flows through automated and QA testing, and overall code hygiene and readability. These focus areas consumed the rest of the quarter's sprint bandwidth.
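MTTD is the average gap between when an outage starts and when someone, or an alert, detects it. Here is a minimal Python sketch, assuming your incident log records both timestamps; the incident records below are invented for illustration.

```python
from datetime import datetime, timedelta

# Hypothetical incident records: when the outage started vs. when it was detected.
incidents = [
    {"started": datetime(2020, 7, 1, 9, 0), "detected": datetime(2020, 7, 1, 9, 45)},
    {"started": datetime(2020, 7, 8, 14, 0), "detected": datetime(2020, 7, 8, 14, 15)},
    {"started": datetime(2020, 7, 20, 22, 0), "detected": datetime(2020, 7, 21, 0, 0)},
]


def mean_time_to_detection(incidents) -> timedelta:
    """Average of (detected - started) across all incidents."""
    if not incidents:
        raise ValueError("need at least one incident")
    # sum() needs a timedelta start value, since the default start is the int 0.
    total = sum((i["detected"] - i["started"] for i in incidents), timedelta())
    return total / len(incidents)


print(mean_time_to_detection(incidents))  # 1:00:00
```

The three gaps are 45, 15, and 120 minutes, so the mean is one hour. Watching this number fall quarter over quarter is one way to see whether new monitoring and alerting is paying off.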

Implementing Quality Systems

When you need to establish your own quality foundation, spend the first 30 days on a comprehensive audit that sets your baselines, so you can measure progress against them. Pull support ticket data, performance metrics, and a technical debt inventory to understand your starting point. Then work with leadership to tie these baselines to company OKRs. Tying quality directly to business outcomes keeps the initiatives in focus.

The next 30 days are all about quality-focused execution. Prioritize fixes based on revenue impact and customer pain points. Roll out automated testing infrastructure and begin migrating to your design system. Track progress weekly against your established baselines. Quality metrics should become as routine as feature velocity in your team's rhythm. Building this muscle pays dividends after you get out of the focused quarter.
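Prioritizing by revenue impact first and customer pain second can be as simple as a sort. Here is a minimal Python sketch, assuming you have an estimate of weekly revenue at risk and a weekly support ticket count for each fix; the backlog items are hypothetical, and a weighted score is a reasonable alternative if you would rather trade the two off against each other.

```python
from dataclasses import dataclass


@dataclass
class Fix:
    title: str
    weekly_revenue_at_risk: float  # estimated dollars lost per week
    weekly_support_tickets: int    # proxy for customer pain


def prioritize(fixes):
    """Order fixes by revenue impact, breaking ties by support ticket volume."""
    return sorted(
        fixes,
        key=lambda f: (f.weekly_revenue_at_risk, f.weekly_support_tickets),
        reverse=True,
    )


# Hypothetical backlog.
backlog = [
    Fix("Gift card checkout timeout", 1_200.0, 35),
    Fix("Profile photo cropping", 0.0, 12),
    Fix("Delivery toggle not saving", 1_200.0, 48),
]
for fix in prioritize(backlog):
    print(fix.title)
```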

The final 30 days focus on validating and scaling your quality practices. Measure impact through reduced support tickets, improved performance metrics, and positive customer sentiment. Document your quality processes and train teams on the new standards. Share wins and learnings broadly, especially with the executives who supported the quality investment. Use this data to plan ongoing quality maintenance, since it is naive to think that a single quarter's pause will be sufficient going forward.

Craft & Quality

Speed to market frequently conflicts with quality and process. A common complaint from the teams I work with is that we'll never come back to the next phase of an MVP. The answer is: it depends. Most MVPs are just that, market adoption tests, and a success often warrants the next feature iteration rather than time for the successful team to clean up. However, just as a professional chef needs to reset her station, a product team needs to establish quality as a principle and be vigilant about pressing pause, so that the next sprint is more effective once the dust of the MVP settles. You don't get the right to innovate until the fundamentals work.